What you need to know about AI ethics

Public Relations Society of America issues guidance on using artificial intelligence.

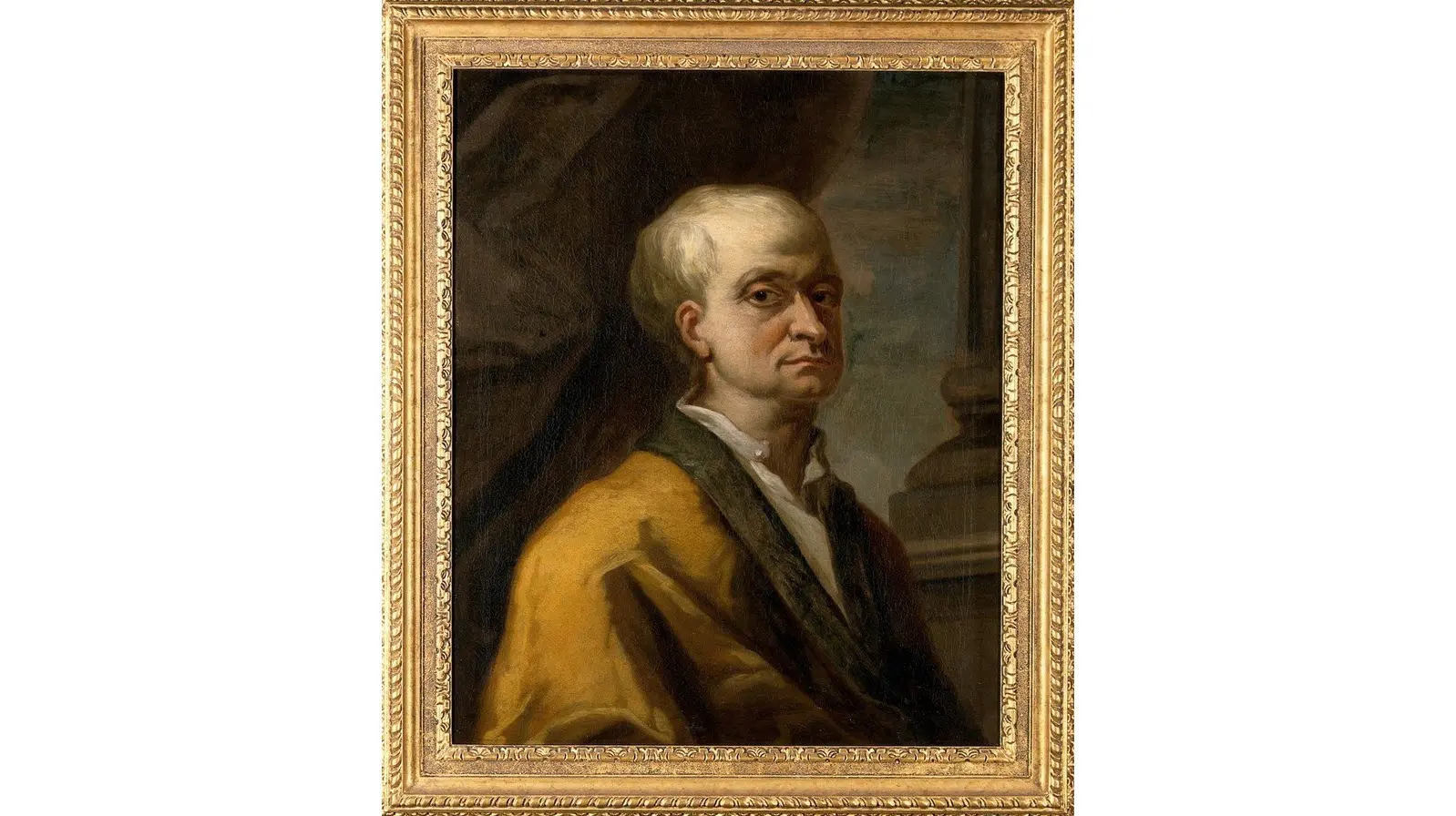

In its new ethical guidance on using artificial intelligence, the Public Relations Society of America says, “AI is not a questioning philosopher such as Socrates, or subject to strikes of genius, such as the moment Isaac Newton saw an apple fall to the ground.”

The first claim restates the obvious and the second repeats a myth promulgated by the scientist himself. Such are the perils of generative AI programs such as ChatGPT: They tell us what everybody knows while confidently slipping in some dubious assertions.

Nonetheless, PRSA, the nation’s leading group for communication professionals, deserves credit for wrestling with ethical issues at a time when many weary PR professionals are rushing to use a new technology that promises to make their work easier.

PRSA’s Board of Ethics and Professional Standards on Nov. 20, 2023, released “Promise & Pitfalls: The Ethical Use of AI for Public Relations Practitioners.” The report ties its analysis to the organization’s Code of Ethics.

It cites five principles from the code and applies them to specific examples. As the title character in Oscar Wilde’s “The Canterville Ghost” said, “I hate the cheap severity of abstract ethics.”

The 10-page report offers many practical suggestions on how to properly use AI. It’s a list that would come in handy for professional communicators updating editorial procedures to accommodate the new technology.

We put in our two cents on three ethical examples from the report:

1. Runaway emails. In one example, the report says, “An AI tool used by a public relations firm to automatically generate and personalize email messages is not administered/supervised/monitored by a public relations professional and spreads dated, false or damaging content.”

PRSA recommends that any content generated by AI be checked for accuracy. Of course, email messages with “dated, false or damaging” information are wrong, whether you use AI or not. What makes AI unique is its potential to “automatically generate” hundreds, even thousands, of emails to reporters in a superficially more personalized way than our current tools allow.

Journalists already complain that PR people send too many press releases, which explains why they open fewer than half of the pitches they receive, according to the fourth-quarter report by PR platform Propel Media. The hope is that AI will truly personalize story pitches, cutting down on irrelevant emails.

The email barrage isn’t effective. Is wasting reporters’ time also unethical? Reporters fear that AI will give PR the power to waste even more of it.

2. “Grassroots” campaign. In another example, a PR person who’s working for an undisclosed client coordinates a letter-writing campaign by asking for help, saying, “ChatGPT, please write for me a letter from fifty different people with names similar to those located in a [region of the country or ethnic origin] to legislators in [state] about [issue].”

This conduct is already prohibited by the ethics code. That it remains so common sadly shows how neglected some PRSA standards are. The report uses this example to address a problem it misses in the example above: AI’s power to perform tasks on an unprecedented scale.

“The program will quickly generate content, but doing so may misrepresent actual public sentiment and is dishonest,” the report says. The report’s solution may not be strong enough to fix the problem.

“Use as a grammar checker or editor to review original content from clearly identified, genuine constituents from disclosed interest groups prior to sending to the legislator,” the report says.

3. Author! Author! The report is at its strongest when it addresses this example: “AI writes a blog post about a medical issue and does not use credible sources.”

“Always fact-check data that generative AI provides,” the report says. “AI chat tools can sometimes produce fabricated or inaccurate information.”

The report adds, “An ethical approach to using AI means the PR practitioner is making conscious and informed choices throughout the process. Rather than letting AI solely dictate the content, use the technology as a supplementary tool, guiding it with precise prompts and rigorously editing its outputs.”

This sounds like the Associated Press’ admonition to its staff that content from generative AI “should be treated as unvetted source material.” If public relations professionals want to work with reporters, we must adopt their highest standards.

The report does not address every issue that AI creates. For example, when must the use of AI be disclosed? How can plagiarism be prevented? Nonetheless, the report makes a valuable contribution, even if it is a bit overdue.

“Newton did not need an apple to remind him that objects fell to earth,” James Gleick wrote in his 2003 biography of the scientist who formulated laws of motion and gravity.

But something needs to hit us on the head to get us thinking about AI. To paraphrase Charlie Chaplin in his 1964 autobiography, the progress of science is far ahead of our ethical behavior.

Tom Corfman, a senior consultant with Ragan Consulting Group, helps communicators keep their fingers out of the gears of machine learning.
