What You Need to Know about Biden’s AI Order

Amid government regulation and negative opinions about artificial intelligence, the pressure for disclosure grows.

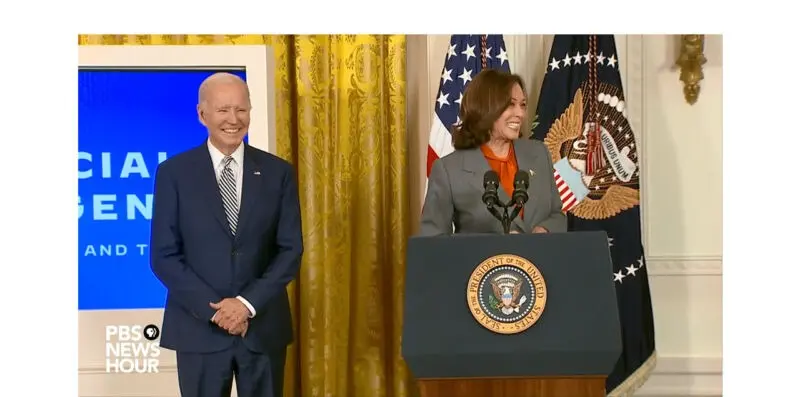

Before Joe Biden signed his sweeping executive order on artificial intelligence last month, his staff showed him a “deep fake” that featured the president.

“I said, ‘When the hell did I say that?’” Biden recalled at the signing.

It’s a question his comms team has probably asked even without AI.

The first-of-its-kind order, issued Oct. 30, 2023, launches a spree of federal rulemaking and standard-setting. It targets the risk that AI-altered text, images and videos could aid terrorists or foreign adversaries, or be used to cheat consumers or tilt elections. Tucked into the 20-page document is a call for federal agencies to label AI-generated content.

The order applies to federal agencies and to companies that develop the most advanced AI tools. But it will also likely shape the private sector’s growing use of AI.

Biden signed the order amid growing public skepticism about artificial intelligence. A federal disclosure rule would likely add to the factors that corporate communicators must weigh when using generative AI, which produces content ranging from text to video. Is disclosure a better practice even when it’s not required?

Regulating AI

The order also calls for safeguards against other AI-related risks, including discrimination against minority groups, unsafe medical practices, invasions of privacy and unfair employment practices. It enlists the Departments of Defense, Education, Energy, Homeland Security, Justice, State and Transportation.

The order seeks to boost innovation by giving some entrepreneurs access to assistance and resources, creating a pilot research program and expanding immigration of AI experts from other countries.

To reduce the risk of fraud, the order calls on the Commerce Department to identify best practices for federal agencies to label, or “watermark,” AI-generated content. As a result, Biden has drawn the most attention to watermarking since the 13th century, when Italian papermakers pioneered the technique.

In a modern update to the work of papermakers in Fabriano, “current watermarking technologies embed an invisible mark in a piece of content to signal that the material was made by an AI,” according to Tate Ryan-Mosley, a writer for MIT Technology Review.

Yet the Biden administration is betting on a technology that so far has a spotty record.

“Researchers have found that the technique is vulnerable to being tampered with, which can trigger false positives and false negatives,” she writes.

Trust AI?

The order comes as professional communicators embrace artificial intelligence, especially generative AI, as a survey released last month by PR platform Notified and PRWeek shows once again. Yet in racing to adopt the new technology, are communicators ignoring the public’s growing skepticism about AI? Consider:

- More than 75% of consumers are concerned about misinformation from generative AI apps such as ChatGPT, according to a Forbes Advisor survey released in July.

- Only 35% of respondents say they’re familiar with ChatGPT, according to a survey by cybersecurity firm Malwarebytes released in June. Of those, 81% are concerned about security and safety risks, and 63% don’t trust the information it produces.

- Nearly 70% of people, including 30% of ChatGPT account holders, have little or no trust in products like ChatGPT to provide accurate information, according to a Marist Poll survey released in April.

It wouldn’t be the first time that public relations professionals have been out of step with public opinion.

Disclosure movement?

Communicators who think Biden’s order won’t affect them are likely to be disappointed.

“While the guidance will be directed to federal agencies, the [executive order] aims to set expectations for the private sector, including by adding requirements in government contracts,” according to lawyers at Mayer Brown, one of the largest law firms in the country.

“I think the idea is if we perhaps deploy this at the federal government level with various agencies, that we’ll see the private sector either take the next step or help us to innovate in that area,” Nicol Turner Lee, director of the Center for Technology Innovation at the Brookings Institution, said in an interview.

If communicators are reluctant to disclose their use of generative AI because of its negative public image, what does that say about using it at all?

What’s next?

Many questions about the possible federal standards remain unanswered, such as how much AI involvement would trigger disclosure.

We’ve asked before: Would “futzing with the copy” avoid disclosure?

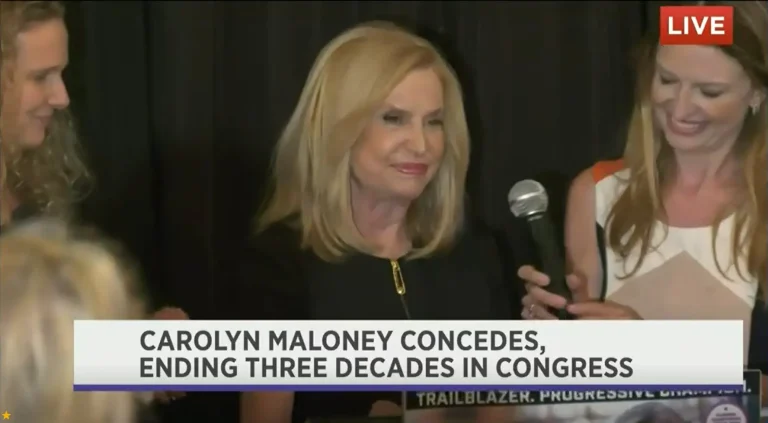

Maybe not. The Associated Press’ standards, released Aug. 16, 2023, encourage its reporters “to exercise due caution and diligence to ensure material coming into AP from other sources is also free of AI-generated content.”

The public’s increasingly negative view of AI amplifies the risk of using it without disclosure in corporate communications. How long before social media troublemakers catch a major corporation copying ChatGPT verbatim, leaving somebody with a plagiarist’s black eye? Or a pink slip?

Generative AI is good for some things, but originality isn’t one of them. What ChatGPT tells you, it’s telling everybody else.

“Using AI to create content will increase the homogeneity of what we collectively produce, even if we try to personalize the output,” according to Kellogg Insight, citing research by Sébastien Martin, a professor at Northwestern University’s Kellogg School of Management.

And people want to know they’re communicating with a human.

Nate Sharadin, a research fellow at the Center for AI Safety, wrote in the Bulletin of the Atomic Scientists, “There’s growing interest in enabling human beings to spot the bot.”

Tom Corfman is a senior consultant at Ragan Consulting Group, where he directs the Build Better Writers program, which develops the natural intelligence of communicators.
